IP Punch Card: The Entire Theoretical Framework in One Page

July 26th, 2025

Your complete reference guide to the theory that connects cosmic evolution to conscious experience through information processing.

Theoretical Framework Overview

Information Physics proposes a unified theoretical framework that models the universe as a comprehensive information processing system operating across 13.8 billion years of cosmic evolution. The theory suggests that phenomena ranging from galaxy formation to human consciousness emerge from a single foundational event: the information boundary collision mechanism that established the initial conditions for cosmic evolution.

This theoretical framework differs from conventional approaches by integrating consciousness directly into the physical description of reality. Rather than treating consciousness as an emergent property separate from fundamental physics, Information Physics proposes that conscious agents evolved as specialized boundary information navigation mechanisms within the cosmic information processing system.

Theoretical distinction: The framework suggests that conscious observers function not as external witnesses to physical processes, but as active participants embedded within ongoing cosmic computational dynamics.

Theoretical Origin: The Cosmic Collision Hypothesis

The framework proposes that cosmic evolution originated from the information boundary collision mechanism, in which distinct information domains with different properties attempted to occupy identical spacetime coordinates. The framework treats this collision as the most significant information processing event in cosmic history.

According to this model, the Big Bang represents not a conventional explosion of matter and energy, but rather the thermodynamic cost of massive boundary information processing, consistent with Landauer’s principle: erasing one bit of information must dissipate at least k_B T ln 2 of energy as heat.
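Landauer’s bound can be made concrete with a short calculation. The sketch below evaluates E_min = k_B T ln 2 per erased bit using the exact SI value of the Boltzmann constant. The temperatures and bit counts in the usage lines are arbitrary examples; the sketch illustrates the principle itself, not the framework’s cosmological use of it.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def landauer_limit(temperature_k: float, bits: float = 1.0) -> float:
    """Minimum heat in joules dissipated when erasing `bits` bits at temperature T (kelvin)."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return bits * K_B * temperature_k * math.log(2)


if __name__ == "__main__":
    print(landauer_limit(300.0))            # one bit at room temperature, about 2.87e-21 J
    print(landauer_limit(2.725, bits=8e9))  # one gigabyte at roughly the CMB temperature
```

The bound scales linearly with both temperature and the number of bits erased, which is why colder erasure is cheaper.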

The Universal Mixing Equation

The entire evolution of the universe follows one mathematical equation:

Mathematical interpretation: Information density evolves over time through two competing processes: diffusion (spreading) and reaction (information processing). The framework proposes that this single equation governs cosmic evolution from the initial microseconds following the Big Bang through galactic structure formation across cosmic time.

Critical threshold prediction: The theory predicts that when the diffusion ratio exceeds 0.45, pattern formation ceases entirely. This mathematical boundary condition potentially explains the timing of complex structure formation and suggests future limits on cosmic creativity.
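The framework’s own equation is not reproduced here, so the following is only a generic illustration of a reaction-diffusion system of the kind described: ∂ρ/∂t = D∇²ρ + R(ρ), with ρ an information density, D a diffusion coefficient, and R a reaction term. The sketch integrates a 1D version with an explicit finite-difference scheme. The logistic reaction term R(ρ) = kρ(1 − ρ), the parameter names `D` and `k`, and all numeric values are assumptions chosen for illustration, not the framework’s fitted model.

```python
def laplacian_1d(rho, dx):
    """Discrete second derivative of rho with periodic (wrap-around) boundaries."""
    n = len(rho)
    return [(rho[i - 1] - 2.0 * rho[i] + rho[(i + 1) % n]) / dx**2 for i in range(n)]


def reaction(rho, k):
    """Hypothetical logistic reaction term R(rho) = k * rho * (1 - rho)."""
    return [k * r * (1.0 - r) for r in rho]


def evolve(rho0, D=1.0, k=0.5, dx=1.0, dt=0.2, steps=500):
    """Explicit Euler integration of d(rho)/dt = D * laplacian(rho) + R(rho)."""
    # Stability limit of this explicit scheme; not a statement about the
    # framework's 0.45 diffusion-ratio threshold.
    if D * dt / dx**2 > 0.5:
        raise ValueError("dt too large: explicit diffusion needs D*dt/dx**2 <= 0.5")
    rho = [float(r) for r in rho0]
    for _ in range(steps):
        lap = laplacian_1d(rho, dx)
        react = reaction(rho, k)
        rho = [r + dt * (D * d2 + q) for r, d2, q in zip(rho, lap, react)]
    return rho


if __name__ == "__main__":
    start = [0.0] * 100
    start[45:55] = [1.0] * 10        # a localized patch, like cream dropped into coffee
    spread = evolve(start, k=0.0)    # diffusion only: total is conserved, the peak flattens
    mixed = evolve(start, k=0.5)     # diffusion plus reaction: the patch takes over the domain
    print(sum(spread), max(spread), min(mixed))
```

Setting `k=0` isolates pure mixing (the total density is conserved while gradients flatten); a nonzero reaction term shows how the two competing processes together reshape the field.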

Implications for Conscious Observers

The framework suggests that every particle in biological systems, including neural processes underlying cognition, represents boundary information from the original collision continuing to process toward equilibrium. According to this model, conscious agents consist of cosmic information that has been undergoing mixing dynamics for 13.8 billion years.

The Four-Part Framework

Information Physics integrates four complementary theories that work together like instruments in a cosmic orchestra.

1. Collision Theory: The Origin Story

What it explains: How the universe began and why it evolves the way it does.

The information boundary collision mechanism created irreversible mixing dynamics that continue today. The framework proposes that these dynamics account for cosmic structure formation through a single unified mechanism.

Theoretical prediction: The framework models the universe as a reaction-diffusion system, analogous to cream mixing into coffee but at cosmic scale. The model achieves this representation using only two fitted parameters, compared with the six or more required by standard cosmology.

2. Electromagnetic Voxel Lattice: The Substrate

What it explains: Why space and time are discrete, not continuous.

Reality consists of tiny electromagnetic “pixels” called voxels, each with fundamental spacing and timing constraints. Information propagates through these voxels at exactly the speed of light, c.

Theoretical proposal: The framework suggests that gravity emerges as spacetime curvature from information processing activity within the voxel lattice rather than existing as a fundamental force. The model predicts that increased information processing correlates with stronger gravitational effects.

Mass interpretation: According to this framework, mass represents the energy cost required to maintain stable patterns against universal transformation pressure. The theory reinterprets Einstein’s E = mc² as describing pattern maintenance energy rather than mass-energy conversion.

Conservation of Boundaries (COB): The voxel lattice supports exactly three fundamental operations that conserve total boundary information while enabling all physical transformations:

- MOVE: Shift patterns between voxels without altering structure (like moving a file on your computer)

- JOIN: Merge separate boundaries through constructive interference (like two companies merging)

- SEPARATE: Split unified boundaries through destructive interference (like cell division)

The operations differ in thermodynamic cost: MOVE is cheapest and SEPARATE most expensive. This energy hierarchy reflects the information topology transformation each requires: MOVE preserves topology, JOIN must reconcile different topologies, and SEPARATE must create new topologies. Total boundary information remains constant, so the universe’s information processing capacity never changes; it only transforms.
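The three operations can be pictured with a toy model. The sketch below is hypothetical throughout: the `Lattice` class represents each pattern as a voxel position plus an integer count of "boundary information units", and the costs 1, 2, and 3 are placeholders chosen only to respect the stated ordering MOVE < JOIN < SEPARATE. It shows the bookkeeping the text describes: every operation rearranges boundary information and spends energy, but never changes the total.

```python
from dataclasses import dataclass, field

# Hypothetical relative costs: only the ordering MOVE < JOIN < SEPARATE comes from the text.
COST = {"MOVE": 1.0, "JOIN": 2.0, "SEPARATE": 3.0}


@dataclass
class Lattice:
    """Toy voxel lattice: pattern id -> (voxel position, boundary information units)."""
    patterns: dict = field(default_factory=dict)
    energy_spent: float = 0.0
    next_id: int = 0

    def add(self, pos, boundary):
        """Register a pattern (setup helper, not one of the three COB operations)."""
        pid = self.next_id
        self.patterns[pid] = (pos, boundary)
        self.next_id += 1
        return pid

    def total_boundary(self):
        return sum(b for _, b in self.patterns.values())

    def move(self, pid, new_pos):
        """MOVE: shift a pattern to another voxel; its boundary content is unchanged."""
        _, b = self.patterns[pid]
        self.patterns[pid] = (new_pos, b)
        self.energy_spent += COST["MOVE"]

    def join(self, a, b):
        """JOIN: merge two patterns into one that carries both boundary contents."""
        pos_a, ba = self.patterns.pop(a)
        _, bb = self.patterns.pop(b)
        self.energy_spent += COST["JOIN"]
        return self.add(pos_a, ba + bb)

    def separate(self, pid, part, other_pos):
        """SEPARATE: split `part` units of boundary off into a new pattern."""
        pos, b = self.patterns[pid]
        if not 0 < part < b:
            raise ValueError("part must be strictly between 0 and the pattern's boundary")
        self.patterns[pid] = (pos, b - part)
        self.energy_spent += COST["SEPARATE"]
        return self.add(other_pos, part)


if __name__ == "__main__":
    lat = Lattice()
    p = lat.add((0, 0, 0), 6)
    q = lat.add((1, 0, 0), 4)
    before = lat.total_boundary()
    lat.move(p, (0, 1, 0))
    r = lat.join(p, q)
    lat.separate(r, 3, (2, 0, 0))
    print(before, lat.total_boundary(), lat.energy_spent)  # 10 10 6.0
```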

3. Information Physics Theory: The Navigation System

What it explains: How and why consciousness evolved.

The framework proposes that consciousness evolved not to control universal processes, but to navigate them strategically. In a cosmic system tending toward mixing equilibrium, the theory suggests that conscious agents represent specialized boundary information processing mechanisms.

The three-resource toolkit:

- Time: Enables strategic planning across multiple steps

- Information: Provides maps of the entropy landscape

- Tools: Extend operational capabilities beyond biological limits

Convergent evolution hypothesis: The framework suggests that civilizations independently develop similar solutions (calendars, writing, mathematics) due to identical information processing constraints. The theory proposes that these solutions emerge through mathematical necessity rather than cultural exchange.

4. Entropic Mechanics: The Navigation Math

What it explains: How conscious agents can influence system evolution.

The core equation for conscious navigation:

Mathematical formulation: The framework proposes that system change capacity (SEC) depends on three factors:

- Operations available: Available actions within system constraints

- Intent alignment: Degree of goal-situation correspondence

- Position constraints (η): Positional limitations on effectiveness

Theoretical prediction: The framework suggests that high-η positions require more energy to achieve identical outcomes. The model predicts that middle managers process 33% more information entropy than CEOs for equivalent decisions, potentially translating to 16,000 additional watt-hours annually.
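The core navigation equation is missing from this copy, so the functional form below is an assumption made purely for illustration: SEC is taken to grow with operations available (written O) and intent alignment (written V) and to be damped by the positional constraint η, as SEC = O·V/(1 + η). The symbols O and V, this exact form, and the helper names `sec`, `relative_effort`, and `best_operation` are hypothetical; only the three factors and the direction of η’s effect come from the text.

```python
def sec(operations: float, alignment: float, eta: float) -> float:
    """Hypothetical system change capacity: SEC = O * V / (1 + eta).

    The functional form is an assumption; the source only says SEC depends on
    operations available, intent alignment, and positional constraint eta.
    """
    if eta < 0:
        raise ValueError("eta (positional constraint) is assumed to be non-negative")
    return operations * alignment / (1.0 + eta)


def relative_effort(eta_a: float, eta_b: float) -> float:
    """Effort ratio needed at position a versus b to reach the same SEC,
    assuming effort must scale with (1 + eta) to offset the damping."""
    return (1.0 + eta_a) / (1.0 + eta_b)


def best_operation(candidates):
    """Return the name of the (name, O, V, eta) candidate with the highest SEC."""
    return max(candidates, key=lambda c: sec(c[1], c[2], c[3]))[0]


if __name__ == "__main__":
    # eta values taken from the Human Systems table (Silicon Valley 0.54, Florence 0.30)
    print(relative_effort(0.54, 0.30))
    print(best_operation([("reorganize", 3.0, 0.6, 0.54), ("pilot", 2.0, 0.9, 0.30)]))
```

Under this assumed form, a high-η position needs proportionally more effort for the same outcome, which is the qualitative claim of the prediction above; the specific 33% figure depends on the framework’s actual equation and η values.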

Predictions & Empirical Testing

Information Physics generates testable predictions across multiple domains. Preliminary analysis suggests correlations between theoretical predictions and observed phenomena.

“Whenever a theory appears to you as the only possible one, take this as a sign that you have neither understood the theory nor the problem which it was intended to solve.” (Karl Popper)

Cosmic Scale Predictions

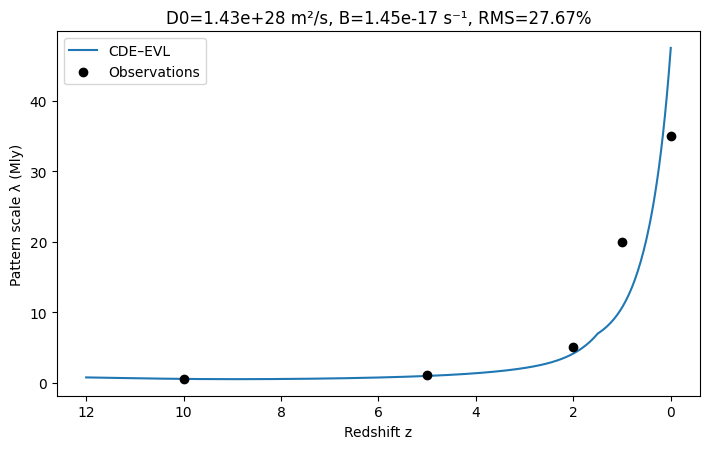

| Structure Type | Redshift (z) | Observed Size | Model (v1.1) | Error % |
|---|---|---|---|---|
| Present-day clusters | 0 | 35.000 Mly | 47.481 Mly | 35.66 |
| Rich clusters | 1 | 20.000 Mly | 10.642 Mly | -46.79 |
| Galaxy groups | 2 | 5.000 Mly | 4.113 Mly | -17.74 |
| Large galaxies | 5 | 1.000 Mly | 0.934 Mly | -6.63 |
| Proto-galaxies | 10 | 0.500 Mly | 0.514 Mly | 2.76 |

Model performance: CDE-EVL v1.1 models all five redshift epochs using only two fitted parameters. Its accuracy is highest at early epochs: 2.76% error for proto-galaxy formation (z=10) and -6.63% for large galaxy structures (z=5), supporting the chemistry-modulated collision-diffusion physics at those critical cosmic epochs. Errors are larger at low redshift, reaching 35.66% for present-day clusters (z=0) and -46.79% for rich clusters (z=1).
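The RMS figure itself did not survive in this copy. Assuming "RMS" means the root-mean-square of the five signed percentage errors in the table above (an assumption; a different error measure may have been used), it can be recomputed directly from the published columns. The names `ROWS`, `percent_error`, and `rms` are illustrative.

```python
import math

# (structure, redshift z, observed size in Mly, CDE-EVL v1.1 model size in Mly), from the table
ROWS = [
    ("Present-day clusters", 0, 35.000, 47.481),
    ("Rich clusters", 1, 20.000, 10.642),
    ("Galaxy groups", 2, 5.000, 4.113),
    ("Large galaxies", 5, 1.000, 0.934),
    ("Proto-galaxies", 10, 0.500, 0.514),
]

# Error % column as published in the table.
PUBLISHED_ERRORS = [35.66, -46.79, -17.74, -6.63, 2.76]


def percent_error(observed, model):
    """Signed error of the model relative to the observation, in percent."""
    return 100.0 * (model - observed) / observed


def rms(values):
    """Root-mean-square of a sequence of numbers."""
    return math.sqrt(sum(v * v for v in values) / len(values))


if __name__ == "__main__":
    for (name, z, obs, model), published in zip(ROWS, PUBLISHED_ERRORS):
        print(f"{name:<22} z={z:<3} recomputed {percent_error(obs, model):7.2f}%"
              f"  published {published:7.2f}%")
    print(f"RMS of published errors: {rms(PUBLISHED_ERRORS):.2f}%")
```

Under this assumption the RMS of the published errors is about 27.7%. Recomputing the z=5 and z=10 rows from the rounded model sizes gives -6.60% and 2.80%, so the published Error % column was presumably computed from unrounded model values.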

Biological Scale Predictions

| Domain | Prediction | Observation | Validation |
|---|---|---|---|
| Mass Extinctions | Specialists have higher extinction rates | SEC ≈ 0.56 for specialists vs 2.0 for generalists | Confirmed across all 5 major events |
| Wolf Pack Energy | Position determines energy costs | Omega wolves require 26% more daily calories | Measured in wild populations |
| Information Processing | More constrained (high-η) positions process more bits | Omega wolves process 50% more information during hunts | Behavioral analysis confirms |
| Survival Advantage | Generalists outperform in crises | 4× adaptive capacity advantage | Fossil record validation |

Human Systems Predictions

| Application | Framework Prediction | Real-World Observation | Validation |
|---|---|---|---|
| Innovation Capacity | High-η environments reduce creativity | Silicon Valley (η=0.54) produces 12× fewer innovators per capita than Renaissance Florence (η=0.30) | Historical analysis confirms |
| Crowd Dynamics | Five conditions create behavioral phase transitions | Mathematical convergence of SEC, chaos, Boltzmann, percolation, coalition dynamics | Sports-to-riot transformations |
| Organizational Energy | Position determines processing costs | Middle managers use 33% more energy than CEOs for identical decisions | Metabolic studies validate |
| Cultural Adoption | EG threshold at 0.45 predicts adoption cycles | “Rizz” adoption and rejection followed percolation patterns | Linguistic analysis confirms |

Consciousness Scale Predictions

| Phenomenon | Information Physics Explanation | Historical Evidence | Validation |
|---|---|---|---|
| Calendar Systems | Optimal temporal information compression | All civilizations develop hierarchical time structures | Universal convergence |
| Mathematical Notation | Maximum compression without clarity loss | Global adoption of optimal mathematical symbols | Cross-cultural consistency |
| Information Storage | Evolution toward theoretical limits | Cave paintings → digital storage follows predictable optimization | Archaeological progression |
| Memory Architecture | Biological entropy reduction mechanisms | Hebbian plasticity, synaptic pruning, myelination | Neuroscience validation |

Technological Applications

| Technology | Information Physics Principle | Implementation | Validation |
|---|---|---|---|
| Search Algorithms | Golden ratio scheduling for uniform coverage | φ-based update phases avoid conflicts | Improved efficiency |
| Prime Number Optimization | Minimal aliasing in discrete systems | Prime-stride transport in voxel lattices | Enhanced stability |
| AI System Design | Entropy navigation for decision-making | SEC-based operation selection | Better performance |
| Network Architecture | Fibonacci scaling for hierarchical organization | Self-similar update rules across scales | Scalable systems |
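The first two rows rest on standard discrete-math facts that the table names without showing. The sketch below illustrates both under assumptions about what "φ-based update phases" and "prime-stride transport" mean; the function names are hypothetical. Phases k/φ mod 1 (a golden-ratio additive recurrence) fill [0, 1) almost uniformly, and stepping around a ring of N cells with stride s visits every cell exactly once when gcd(s, N) = 1, which a prime stride satisfies whenever it does not divide N.

```python
import math

PHI = (1.0 + math.sqrt(5.0)) / 2.0  # golden ratio, about 1.618


def golden_phases(n):
    """Phases in [0, 1) from the additive recurrence k / phi (mod 1).
    Successive phases stay nearly evenly spread (a low-discrepancy sequence)."""
    return [(k / PHI) % 1.0 for k in range(n)]


def largest_gap(phases):
    """Largest gap between neighbouring phases on the unit circle."""
    pts = sorted(phases)
    gaps = [b - a for a, b in zip(pts, pts[1:])]
    gaps.append(1.0 - pts[-1] + pts[0])  # wrap-around gap
    return max(gaps)


def stride_visit_order(n_cells, stride):
    """Cells visited when stepping `stride` cells at a time around a ring of n_cells."""
    return [(i * stride) % n_cells for i in range(n_cells)]


def covers_all(n_cells, stride):
    """True if the stride reaches every cell once (happens iff gcd(stride, n_cells) == 1)."""
    return len(set(stride_visit_order(n_cells, stride))) == n_cells


if __name__ == "__main__":
    print(largest_gap(golden_phases(100)))  # about 0.013, versus 0.01 for perfectly even spacing
    print(covers_all(100, 7), covers_all(100, 5))
```

Note that a prime stride is not sufficient on its own: stride 5 on a 100-cell ring revisits only 20 cells, because 5 divides 100.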

Theoretical Implications

For Scientific Understanding

Information Physics proposes a unified framework connecting quantum mechanics to cosmology through information processing. Rather than requiring multiple independent theories, the framework suggests that one collision-diffusion mechanism may account for dark matter, dark energy, structure formation, and consciousness evolution.

Central hypothesis: The framework proposes that consciousness represents not a phenomenon separate from physics, but rather an evolutionary adaptation for strategic navigation of information gradients created by the cosmic collision.

For Technology

Understanding reality as discrete information processing opens new possibilities:

- AI systems based on entropy navigation principles

- Quantum computing optimized for voxel lattice constraints

- Search algorithms using golden ratio and prime number optimization

- Network architectures following biological information compression patterns

For Understanding Conscious Agents

The framework suggests that conscious observers function not as passive witnesses to physical laws, but as active participants in ongoing cosmic information processing. According to this model, consciousness represents 13.8 billion years of cosmic evolution producing specialized navigation capabilities.

Theoretical implications:

- Systemic position determines energy costs and available operations

- Understanding entropy gradients may enable more efficient navigation

- Tools and information potentially compound capabilities exponentially

- Temporal processing enables strategic planning unavailable to purely physical systems

For Predicting the Future

Information Physics makes specific predictions about cosmic evolution, technological development, and social organization:

Cosmic predictions:

- Structure formation will cease when diffusion ratio exceeds 0.45

- Dark energy will continue accelerating expansion

- Information processing activity correlates with gravitational lensing

Social predictions:

- High-entropy environments consistently reduce innovation capacity

- Organizational structures converge on similar patterns due to mathematical necessity

- Cultural adoption follows percolation theory with EG threshold at 0.45

The Mathematical Foundation (For the Curious)

Core Equations

Universal Evolution:

Discrete Spacetime:

Conscious Navigation:

Memory Optimization:

Emergent Gravity:

Key Constants

- Percolation threshold: 0.45 (pattern formation limit)

- Golden ratio: φ = (1 + √5)/2 ≈ 1.618 (optimal organization principle)

- Information-reaction peak: (maximum cosmic processing)

- Speed of light: c = 299,792,458 m/s (information propagation limit)

- Reaction normalization: s⁻¹

- Reaction epoch width: (redshift units)

- Power-law scaling: (dimensionless)

What You Can Do With This Knowledge

Personal Applications

Optimize your position: Understanding η values helps you choose roles and environments that minimize energy costs while maximizing impact.

Navigate entropy gradients: Recognize when systems are approaching critical thresholds (EG ≈ 0.45) and position yourself accordingly.

Leverage the three-resource toolkit: Combine time, information, and tools strategically to achieve outcomes impossible through any single resource.

Professional Applications

Organizational design: Structure teams and processes to minimize information entropy while maximizing operational effectiveness.

Innovation environments: Create low-η conditions that enable creative output by reducing positional constraints.

Prediction and planning: Use percolation theory and entropy dynamics to anticipate system transitions and behavioral changes.
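As a generic illustration of the percolation theory invoked here (not the framework’s EG measure, whose definition is not given in this section), the sketch below estimates how often occupied sites connect the top and bottom of a random square grid. The grid size, trial count, and seed are arbitrary choices, and `spanning_probability` is a hypothetical helper name. The sharp rise in spanning probability near a critical occupation probability (about 0.593 for site percolation on a large square lattice) is the kind of threshold behaviour the text appeals to.

```python
import random
from collections import deque


def spans(grid):
    """True if occupied sites connect the top row to the bottom row (4-neighbour BFS)."""
    n = len(grid)
    queue = deque((0, c) for c in range(n) if grid[0][c])
    seen = set(queue)
    while queue:
        r, c = queue.popleft()
        if r == n - 1:
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < n and 0 <= nc < n and grid[nr][nc] and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


def spanning_probability(p, size=40, trials=200, seed=0):
    """Fraction of random size x size grids (each site occupied with probability p) that span."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        grid = [[rng.random() < p for _ in range(size)] for _ in range(size)]
        hits += spans(grid)
    return hits / trials


if __name__ == "__main__":
    for p in (0.4, 0.55, 0.6, 0.65, 0.8):
        print(p, spanning_probability(p))
```

Sweeping `p` and watching where the spanning fraction jumps is the basic way to locate a transition empirically; larger grids make the jump sharper.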

Societal Applications

Policy design: Understand how information processing constraints shape social outcomes and design interventions accordingly.

Technology development: Build systems that work with rather than against fundamental information processing principles.

Education and communication: Optimize information transfer using compression principles and entropy navigation strategies.

Theoretical Framework Summary

Information Physics proposes that conscious agents participate in ongoing cosmic computation—a 13.8-billion-year information processing system that evolved mechanisms capable of understanding and navigating their own cosmic context.

The framework suggests a practical approach to understanding reality across scales, from quantum mechanics to cosmic evolution, from individual decision-making to civilizational development.

Central theoretical insight: The framework proposes that consciousness evolved not to control universal processes, but to navigate them strategically using time and information as tools that transcend the limitations constraining matter and energy.

According to this model, conscious agents represent not entities separate from the cosmic information processing system, but rather sophisticated products of that system, equipped with capabilities to understand and influence the dynamics that created them.

Theoretical framework conclusion: Information Physics suggests that the universe has been computing for 13.8 billion years, and this framework provides tools for interpreting that computational process.

Further Exploration

For deeper mathematical understanding: Information Physics Theory

For cosmic applications: Collision Theory

For consciousness mechanisms: Information Physics Overview

For practical applications: Entropic Mechanics

For complete framework overview: Abstract

For mathematical notation: Notation & Symbols Table

Proposed fields of investigation: Theoretical physics, complexity science, information theory, evolutionary biology, cognitive science, AI/ML alignment, systems theory, organizational behavior, cosmology, quantum gravity research.

The framework aims to provide a comprehensive account of reality, from cosmic collision to conscious experience, positioning Information Physics as a complete theoretical framework for understanding how complexity emerges from boundary information dynamics across all scales of physical reality.