Measuring Context Pollution in AI Workflows

July 19th, 2025

We know that leaky prompts create context pollution that systematically degrades AI performance over time. But knowing a problem exists and being able to measure it are two different challenges. How do you quantify the moment when a focused AI conversation starts to drift? How do you detect semantic misalignment before it becomes a user experience problem?

The answer lies in measuring context pollution—a diagnostic signal that captures the growing semantic distance between your original intent and where the AI conversation has actually gone. This isn’t just another metric; it’s a way to make context pollution visible, measurable, and actionable.

Defining Context Pollution

Context pollution is the measurable distance between original intent and current direction, created by the natural entropy of complex interactions.

Formula

$$\mathrm{CP} = 1 - S(\text{anchor}, \text{current})$$

Definition

- CP = Context Pollution (range: 0 to 1, higher is worse)

- S(anchor, current) = Cosine similarity between the original task intent embedding and the current working context embedding

A similarity score of 1.0 means perfect alignment with the original task. As that score drops, the Context Pollution grows, signaling increasing semantic divergence.
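The definition above can be sketched in a few lines of Python. This is a minimal illustration, assuming CP is computed as one minus the cosine similarity (which is how the definitions read) and that embeddings arrive as plain lists of floats from whatever embedding model you use. The function names are hypothetical, and clamping negative similarities to a CP of 1 is an assumption made here to keep the result in the stated 0 to 1 range.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def context_pollution(anchor: Sequence[float], current: Sequence[float]) -> float:
    """CP = 1 - S(anchor, current), clamped to the 0..1 range."""
    similarity = cosine_similarity(anchor, current)
    # Assumption: a negative similarity is treated as maximal pollution (CP = 1).
    return min(1.0, max(0.0, 1.0 - similarity))
```

Identical embeddings give a CP of 0, and orthogonal embeddings give a CP of 1.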

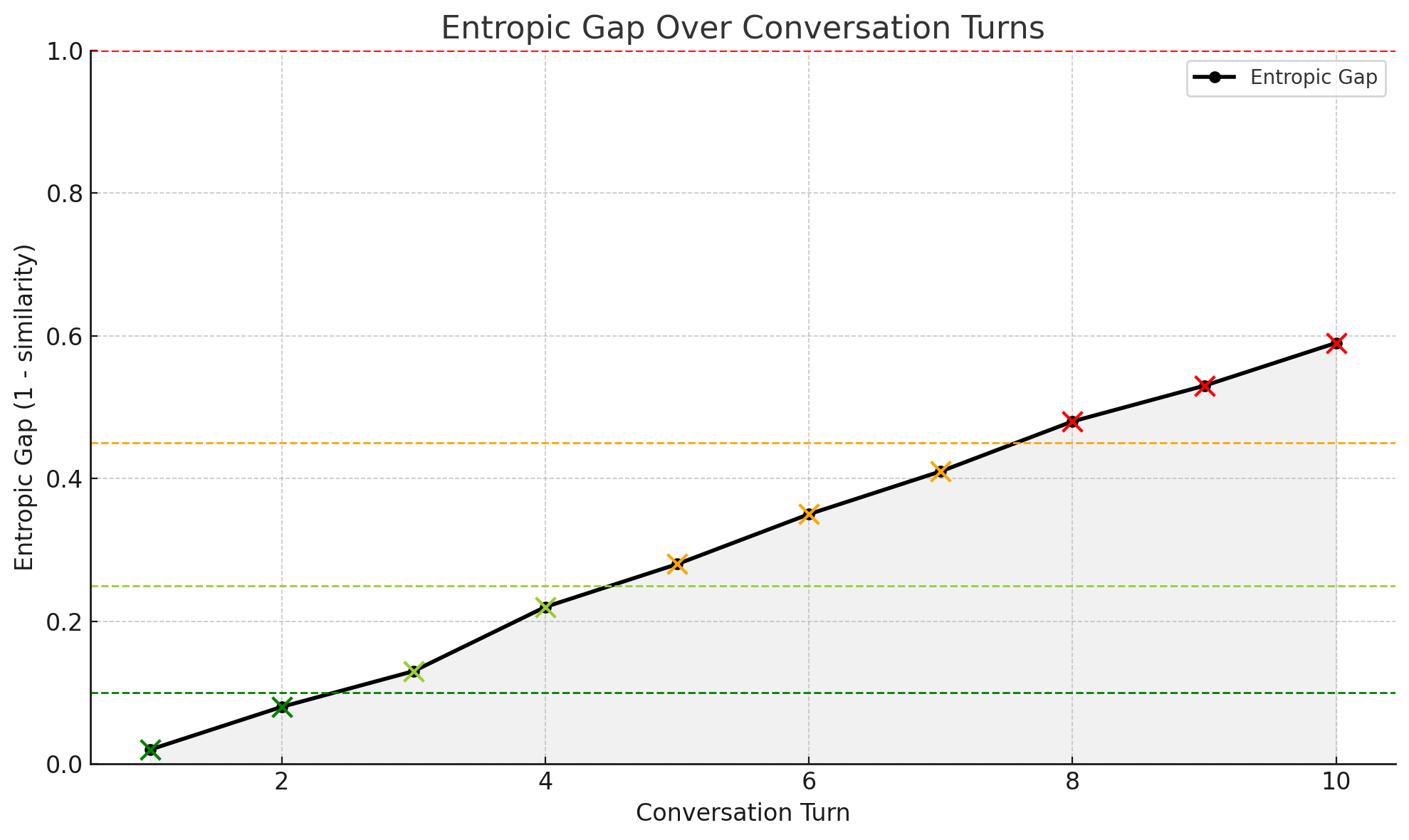

Risk Interpretation

Understanding when context pollution becomes actionable requires clear thresholds that translate abstract similarity scores into concrete operational decisions. These risk levels provide systematic guidelines for when to intervene in AI workflows before performance degradation becomes user-facing.

| Context Pollution | Risk Level | Interpretation |
|---|---|---|
| < 0.10 | Aligned | Task intent and active context are strongly aligned. No corrective action needed. |
| 0.10–0.25 | Mild Drift | Minor drift detected. Performance quality may begin to degrade. Review for subtle misalignment. |
| 0.25–0.45 | Noticeable | Significant drift from the original task. AI responses may be off-topic or underperforming. |
| > 0.45 | High Risk | Severe misalignment. System is likely optimizing against outdated or polluted context. Re-anchoring required. |

These thresholds enable proactive intervention rather than reactive damage control, allowing systems to maintain alignment before users notice degraded performance.
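As a sketch, the risk table can be turned into a small lookup function. The table does not say which band the boundary values 0.25 and 0.45 belong to, so the handling below (0.25 counts as Noticeable, 0.45 counts as Noticeable) is an assumption, as is the function name.

```python
def risk_level(cp: float) -> str:
    """Map a Context Pollution score to the risk levels in the table above."""
    if not 0.0 <= cp <= 1.0:
        raise ValueError("CP is expected to lie in the range 0 to 1")
    if cp < 0.10:
        return "Aligned"
    if cp < 0.25:
        return "Mild Drift"
    # Assumption: exactly 0.45 stays in this band, since High Risk is "> 0.45".
    if cp <= 0.45:
        return "Noticeable"
    return "High Risk"
```

For example, `risk_level(0.30)` falls in the Noticeable band, which is where the article suggests responses may already be off-topic.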

Deep Research Workflow Example

To see how context pollution measurement works in practice, we can trace through a systematic workflow where drift detection and re-anchoring are built into the process itself.

Consider an AI research assistant helping with a complex analysis project:

- Initial context gathering: AI gathers background on the research topic, identifies key search parameters, and proposes a research plan

- Anchor creation: Once the user agrees the agent has the correct plan, that context and task definition is stored as the anchor embedding

- Research execution: Agent performs searches and begins building a comprehensive report

- Extended interaction: User digs into specific sources, asks follow-up questions, and requests clarifications over multiple turns T(x)

- Drift detection: AI periodically compares current context against the anchor embedding to check for semantic drift

- Threshold decision:

  - If drift < acceptable threshold (e.g., 0.25): Agent continues with current trajectory

  - If drift > acceptable threshold: Agent initiates clarification sub-loop to realign task and context

- Re-anchoring: On user acceptance of clarifications, agent creates a new anchor embedding

- Workflow continuation: Research continues with updated alignment reference

Although the visualization refers to Entropic Gap (EG), the underlying mathematics, the same principles apply to Context Pollution (CP).

This systematic approach prevents research scope creep and ensures the final deliverable matches the original intent, even through dozens of interactions. Additionally, the re-anchoring process naturally manages context window limits—when the system creates a new anchor, it can prune outdated context and irrelevant conversation history, avoiding the truncation issues that plague today’s long-running AI workflows. This means research sessions can continue indefinitely without degrading due to either semantic drift or technical limitations.
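The anchor, drift-check, and re-anchoring steps of this workflow can be sketched as a small monitor object. This is an illustration under stated assumptions rather than a reference implementation: the class and method names are hypothetical, embeddings are supplied by the caller as lists of floats, CP is taken as one minus cosine similarity, and a drift exactly equal to the threshold is treated as acceptable because the workflow does not specify that case.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence


def _context_pollution(anchor: Sequence[float], current: Sequence[float]) -> float:
    """CP = 1 - cosine similarity, clamped to 0..1 (assumed definition)."""
    dot = sum(x * y for x, y in zip(anchor, current))
    norms = math.sqrt(sum(x * x for x in anchor)) * math.sqrt(sum(y * y for y in current))
    if norms == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return min(1.0, max(0.0, 1.0 - dot / norms))


@dataclass
class DriftMonitor:
    anchor: Sequence[float]          # embedding of the agreed plan and task
    threshold: float = 0.25          # acceptable drift, as in the example above
    history: List[float] = field(default_factory=list)

    def check(self, current: Sequence[float]) -> str:
        """Record the current drift and return 'continue' or 'clarify'."""
        cp = _context_pollution(self.anchor, current)
        self.history.append(cp)
        return "clarify" if cp > self.threshold else "continue"

    def reanchor(self, new_anchor: Sequence[float]) -> None:
        """Adopt a new anchor once the user accepts the clarifications."""
        # The history is kept so the full drift curve stays available.
        self.anchor = new_anchor
```

In use, the agent would call `check` periodically with the embedding of its current working context, start the clarification sub-loop whenever it returns `"clarify"`, and call `reanchor` after the user confirms the realigned task.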

Causes of Drift and Decay

Leaky prompts are not bugs; they are predictable outputs of unstructured conversations. Context pollution emerges from two core dynamics:

- Task drift: The original objective loses clarity as user instructions evolve without reaffirmation.

- Contextual noise: Corrections, side discussions, and metadata accumulate and distort the working memory.

Unlike humans, who can filter and prioritize context, LLMs treat all context as equally relevant unless instructed otherwise. As a result, they often overfit to noise, optimize for the wrong task, or respond to outdated goals.

For a deeper exploration of why these failure modes emerge, see Leaky Prompts: How Context Pollution Degrades AI Performance—that article explains the phenomenon, while this one shows you how to measure and manage it.

Understanding these causes is crucial, but measuring drift becomes even more powerful when combined with other system signals.

Connecting Drift to System Failures

Measuring context pollution provides a quantitative signal, but it becomes even more effective when paired with qualitative evaluations. Most AI systems already log test failures, unexpected outputs, or annotated breakdowns. By comparing these logs with context pollution measurements, you can start to map how semantic drift contributes to downstream failure.

Building a Hybrid Diagnostic Model

Effective drift detection requires combining multiple data sources into a unified analytical framework. Rather than relying solely on semantic similarity scores, successful systems integrate quantitative measurements with operational outcomes. This approach creates a diagnostic framework that operates on three key components:

- Drift curve: how far the system has moved from original intent

- Eval outcome: whether the task succeeded, failed, or degraded

- Interaction pattern: where and when breakdown occurred in the session

When these three signals align, you can trace system failures back to specific moments of semantic divergence, creating a clear causal chain from drift to breakdown.
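One way to hold the three components together is a small record per session, plus a check for whether drift crossed a threshold before the observed breakdown. The field names, the outcome labels, and the 0.25 default are assumptions for illustration. Note that the check only establishes ordering in time; it does not prove that drift caused the failure.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SessionDiagnostic:
    drift_curve: List[float]        # CP measured at each turn
    eval_outcome: str               # "succeeded", "failed", or "degraded"
    breakdown_turn: Optional[int]   # turn index where breakdown was observed, if any


def first_crossing(drift_curve: List[float], threshold: float = 0.25) -> Optional[int]:
    """Return the first turn index whose CP exceeds the threshold, or None."""
    for turn, cp in enumerate(drift_curve):
        if cp > threshold:
            return turn
    return None


def drift_preceded_breakdown(session: SessionDiagnostic, threshold: float = 0.25) -> bool:
    """True when a non-successful session drifted past the threshold at or before breakdown."""
    crossing = first_crossing(session.drift_curve, threshold)
    return (
        session.eval_outcome != "succeeded"
        and session.breakdown_turn is not None
        and crossing is not None
        and crossing <= session.breakdown_turn
    )
```

Sessions where this returns `False` despite a failed outcome are candidates for the non-drift causes discussed in the failure patterns.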

Common Failure Patterns

Different types of semantic drift produce distinct failure signatures that emerge consistently across AI workflows. By cataloging these patterns, you can build predictive models that anticipate breakdown before it becomes user-facing. Analysis of context pollution data reveals several recurring failure modes:

- Sharp drift early in a session often results in task confusion or tool misfire

- Gradual drift over time may lead to vague, repetitive, or hallucinated responses

- Low drift but high failure indicates a different cause—likely memory constraints, tool errors, or retrieval failures

These patterns form the foundation for automated intervention systems that can recognize drift signatures and trigger appropriate corrective measures.
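A first pass at recognizing these three patterns could be a simple rule-based classifier over the drift curve. The heuristic below is a deliberately crude sketch: the function name, the 0.25 threshold, and the choice of the first three turns as "early" are all assumptions, and a production system would more likely learn these boundaries from logged sessions.

```python
from typing import List


def classify_session(
    drift_curve: List[float],
    failed: bool,
    threshold: float = 0.25,   # assumed drift threshold
    early_turns: int = 3,      # assumed definition of "early in a session"
) -> str:
    """Label a session with one of the failure patterns listed above."""
    if not drift_curve:
        raise ValueError("drift_curve must contain at least one measurement")
    # Index of the first turn whose CP exceeds the threshold, if any.
    crossing = next((i for i, cp in enumerate(drift_curve) if cp > threshold), None)
    if crossing is None:
        # Drift stayed low: a failure here points to another cause.
        return "low drift, high failure" if failed else "aligned"
    if crossing < early_turns:
        return "sharp early drift"
    return "gradual drift"
```

A session labeled "low drift, high failure" would then be routed to checks for memory constraints, tool errors, or retrieval failures rather than to re-anchoring.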

Operational Benefits

The real value of drift measurement emerges when you can act on the insights systematically. When you connect context pollution data to known failure points, you can classify failure modes by shape and trajectory. This classification enables several critical operational capabilities:

- Drift signature mapping: clustering different types of decay into recognizable patterns

- Root cause visibility: identifying which type of drift leads to which kind of breakdown

- Early intervention design: triggering recovery mechanisms before user-visible errors occur

By treating drift as a system-wide variable—not just a user experience detail—you create a full observability layer around alignment. You’re no longer asking “what went wrong,” but “when did it start, how far did it go, and what did the system try to do before it broke?” This level of diagnostic precision transforms AI debugging from reactive troubleshooting into proactive system design.

Once you can reliably detect and categorize drift patterns, the next step is building systems that actively counteract them.

Strategies to Track and Control the Gap

When you identify Context Pollution as a key failure mode, you can design systems that measure and counteract it. Effective mitigation requires combining detection mechanisms with active intervention strategies.

The most successful approaches treat drift prevention as an architectural principle rather than a post-hoc debugging tool. Consider these proven mitigation techniques:

- Anchor reference embeddings: Save a vector representation of the original task to continuously compare against the evolving context.

- Drift visualizations: Plot the Context Pollution over time to reveal when and how semantic misalignment occurs.

- Context pruning: Remove irrelevant, outdated, or conflicting turns from the prompt history.

- Task reinforcement: Reiterate the task objective every few turns or whenever drift exceeds a certain threshold.

- Reset triggers: If CP exceeds 0.45, initiate re-anchoring prompts or system-initiated clarifications.

Each of these strategies addresses a specific failure pattern and allows your AI system to regain alignment proactively. The key is implementing them as systematic processes rather than manual interventions, creating self-correcting workflows that maintain task coherence automatically.
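As one example, context pruning can be sketched as a filter that keeps only the turns still semantically close to the anchor. This assumes each turn has its own embedding, which the article does not specify. The names are hypothetical, and the similarity floor of 0.55 is an assumption chosen to mirror the 0.45 CP reset threshold.

```python
import math
from typing import List, Sequence, Tuple

# A turn is its text plus an embedding supplied by the caller (assumed shape).
Turn = Tuple[str, Sequence[float]]


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norms == 0.0:
        raise ValueError("embeddings must be non-zero vectors")
    return dot / norms


def prune_context(
    turns: List[Turn],
    anchor: Sequence[float],
    min_similarity: float = 0.55,  # assumed floor, i.e. per-turn CP of at most 0.45
) -> List[Turn]:
    """Keep turns whose similarity to the anchor meets the floor, in original order."""
    return [turn for turn in turns if _cosine(anchor, turn[1]) >= min_similarity]
```

Filtering on similarity alone can discard turns that are off-topic but still necessary, such as a user correction, so in practice this would likely be combined with rules that always retain certain turn types.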

How AI Can Help Close the Gap

As LLMs improve, they won’t just suffer from drift—they’ll help detect it. If an AI can compute its own Context Pollution, it can:

- Detect when a user has unintentionally changed direction

- Suggest clarification or re-anchoring strategies

- Visually signal drift in a UI layer

Rather than replacing the human as decision-maker, the AI becomes a partner in coherence. It holds onto the thread even when the conversation wanders.

This completely changes the dynamic: instead of humans keeping AI on task, AI helps humans stay aligned with their own goals.

Why It Matters Now

As agents become embedded in multi-step workflows and long conversations, the ability to maintain task coherence becomes critical. A 2% misalignment early in the chain can create a 40% failure rate by the end.
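The article does not say how these figures were derived. One reading that reproduces them, offered here as an assumption, is that each step in the chain carries an independent 2% chance of introducing a misalignment, so the chance of at least one misalignment after n steps is 1 − 0.98ⁿ. The function name below is hypothetical.

```python
def cumulative_failure_rate(per_step_rate: float, steps: int) -> float:
    """Probability of at least one failure across independent steps."""
    if not 0.0 <= per_step_rate <= 1.0:
        raise ValueError("per_step_rate must be a probability between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    # Assumption: failures at each step are independent of one another.
    return 1.0 - (1.0 - per_step_rate) ** steps
```

Under that assumption, a 2% per-step rate reaches roughly 40% after about 25 steps, which is a plausible length for a multi-step agent workflow.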

Context Pollution gives you a way to detect that misalignment before it becomes a user complaint. It lets you debug AI workflows the same way you debug latency or churn.

Conclusion

The progression from concept to implementation is clear: leaky prompts describe the failure mode, context pollution describes the technical cause, and context pollution measurement provides the signal that makes intervention possible.

But measurement without action is just monitoring. The real power emerges when you build systems that can detect drift early and respond systematically. The research workflow example demonstrates how context pollution measurement transforms from diagnostic tool into architectural principle, enabling AI systems that maintain alignment across extended interactions while managing finite context windows.

With context pollution measurement, you can build systems that:

- Maintain task fidelity across long sessions

- Trigger re-anchoring when needed

- Visualize semantic decay over time

- Prevent context window truncation issues

- Enable indefinite workflow continuation

This shifts AI development from reactive troubleshooting to proactive alignment engineering. The best AI systems will not be those that start strong; they’ll be the ones that stay aligned. In an era where AI workflows are becoming longer and more complex, that capability is not merely useful but essential.